IOTA: A Problem-Solving Framework of Distributed Machine Intelligence

Jun 8, 2018, 5:22PM by Martin Banov

An overview of the data analysis and data coordination methods employed by IOTA in merging together distributed ledgers and machine learning.

This is the second article in a series of three exploring the roots, the applications and the possibilities of IOTA. The first article presented the unique computing concepts used by IOTA, such as the tangle and ternary computing. The third article explores the Qubic protocol.

IOTA's tangle is unique among distributed ledger models: instead of being grounded in a universal order around a canonical blockchain, it forms a flexible architecture that organizes itself around probabilistic estimates. This enables a data-driven coordination based on training, well suited to unleashing machine learning technologies. In the tangle, machines can train themselves to execute operations better, while blockchains are, in essence, blind, deaf and dumb in that regard.

IOTA's tangle resembles a rudimentary neural network that propagates transactions, circulates data and allows that data to be organized into meaningful, cohesive structures. In that sense, the tangle can be understood as a general framework for advancing Artificial Intelligence. (In a way, what open source is to software, IOTA is to Artificial Intelligence.)

The methods of statistical inference that IOTA employs are constantly at work under the hood in platforms like Facebook, as well as Google's search engine and its suite of integrated applications. IOTA, however, leverages that technology in an effort to lay the foundations for a machine economy that reflects the dynamics of objective reality in the physical realm of motion and change.

Bayesian Probability: Aligning Perception With Reality And The Virtual With The Actual

The value of information does not survive the moment in which it was new. It lives only at the moment. It has to surrender to it completely and explain itself to it without losing any time. – Walter Benjamin

The Bayesian methods that IOTA makes use of have a long history of practical application across many domains and fields of inquiry (from insurance, risk assessment and business management to game development, drug discovery and epidemiology, to name a few). Applied to machine learning, Bayesian probability is a powerful reasoning tool that enables machines and programs to handle missing data efficiently, extract information from limited datasets and make often remarkably accurate predictions from partial information.

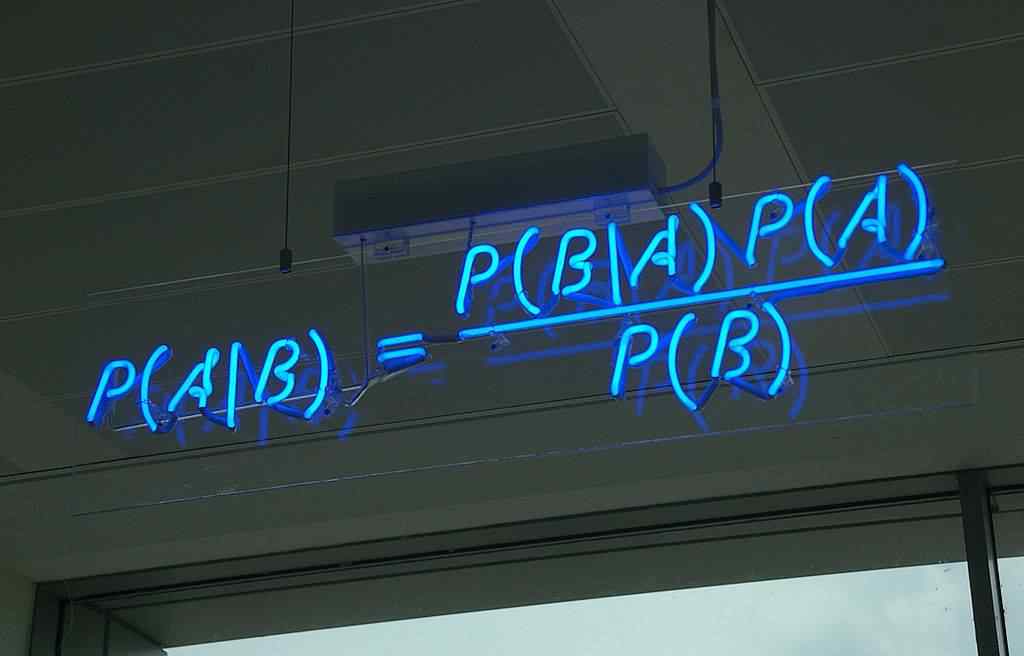

The basic intuition of Bayesian reasoning is succinctly captured in Bayes' theorem. The formula below is a seemingly straightforward heuristic for making educated guesses by quantifying beliefs based on available information and prior knowledge of conditions that might relate to the phenomenon in question.

Bayes' theorem: P(A|B) = P(B|A) × P(A) / P(B).

The equation gives the probability of A given that B is true. P(A) and P(B) are the marginal probabilities of events A and B, each considered on its own, and P(B|A) is the conditional probability of B given A. We assign distributions to everything in the formula and then infer the probability of the event we're interested in.

A commonly cited example of Bayes' theorem in practice is the problem of false positives in clinical diagnostics. Consider a drug test that is 99% sensitive and 99% specific to the drug it tests for, meaning it returns a positive result for 99% of drug users and a negative result for 99% of non-users.

But suppose also that only about 0.5% of the tested population actually uses that specific drug. Factoring in all these priors with Bayes' theorem, what is the probability that a randomly selected individual with a positive test result is actually a drug user? Following the formula, we arrive at (0.99 × 0.005) / (0.99 × 0.005 + 0.01 × 0.995) ≈ 0.33: only about a 33% probability that the person who tested positive is actually a drug user, and about a 67% likelihood that they are not.
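
The arithmetic above can be checked with a few lines of Python. This is a minimal sketch; the function name `posterior` and its parameter names are illustrative choices, not part of any IOTA library.

```python
def posterior(prior, sensitivity, specificity):
    """Probability of being a drug user given a positive test, via Bayes' theorem."""
    true_pos = sensitivity * prior                # P(+ | user) * P(user)
    false_pos = (1 - specificity) * (1 - prior)   # P(+ | non-user) * P(non-user)
    return true_pos / (true_pos + false_pos)      # divide by P(+)

p = posterior(prior=0.005, sensitivity=0.99, specificity=0.99)
print(f"P(user | positive) = {p:.3f}")  # prints 0.332
```

The denominator is simply the total probability of a positive result, which is why the rare true positives are swamped by false positives from the much larger non-user group.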

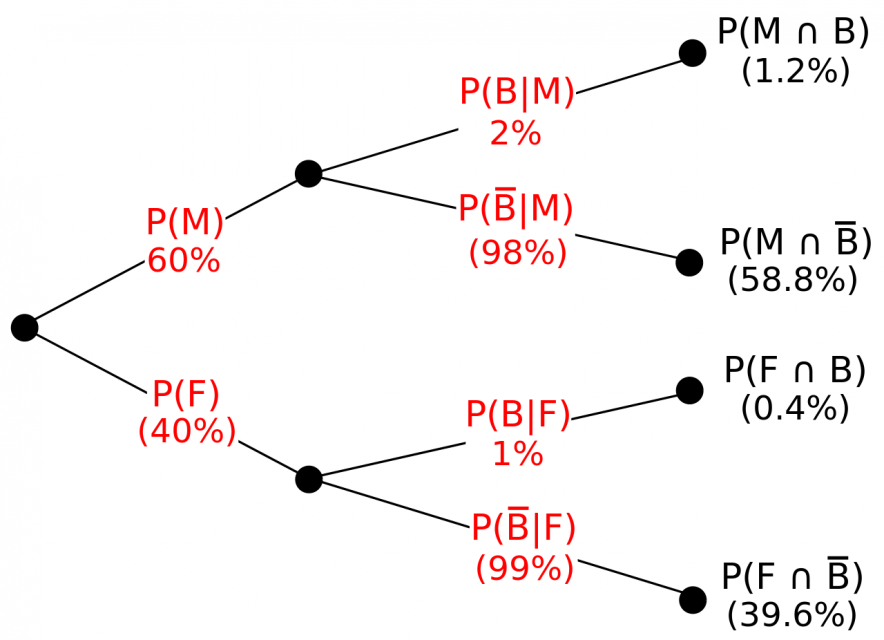

A diagram of step-wise Bayesian inference structured as a DAG.

In Bayesian interpretations, probability measures a “degree of belief.” Bayes’ theorem then links the degree of belief in a proposition before and after accounting for objective evidence that was not known in advance. The updated belief then serves as the prior for the next piece of evidence, so the process repeats step by step, growing more accurate as it absorbs new information and probability estimates about its surroundings at each point in time. Because each step depends only on the current belief and the newest evidence, this chain of updates has the memoryless character of a Markov chain.
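
A minimal sketch of this step-wise updating, reusing the drug-test numbers from the example above: each posterior becomes the prior for the next observation. It assumes, purely for illustration, that repeated tests are independent given a person's true status; real repeat tests are often correlated. The helper name `update` is hypothetical.

```python
def update(prior, p_evidence_if_true, p_evidence_if_false):
    """One Bayes update for a yes/no hypothesis; returns the posterior."""
    evidence = p_evidence_if_true * prior + p_evidence_if_false * (1 - prior)
    return p_evidence_if_true * prior / evidence

belief = 0.005  # initial prior: base rate of drug use
history = []
for _ in range(3):
    # A positive result updates the belief; the posterior becomes the next prior.
    # Assumption: test outcomes are independent given the true status.
    belief = update(belief, p_evidence_if_true=0.99, p_evidence_if_false=0.01)
    history.append(belief)

print([round(b, 4) for b in history])
```

After one positive result the belief is about 33%, but a second independent positive result pushes it to roughly 98%: the same evidence weighs very differently depending on the prior it updates.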

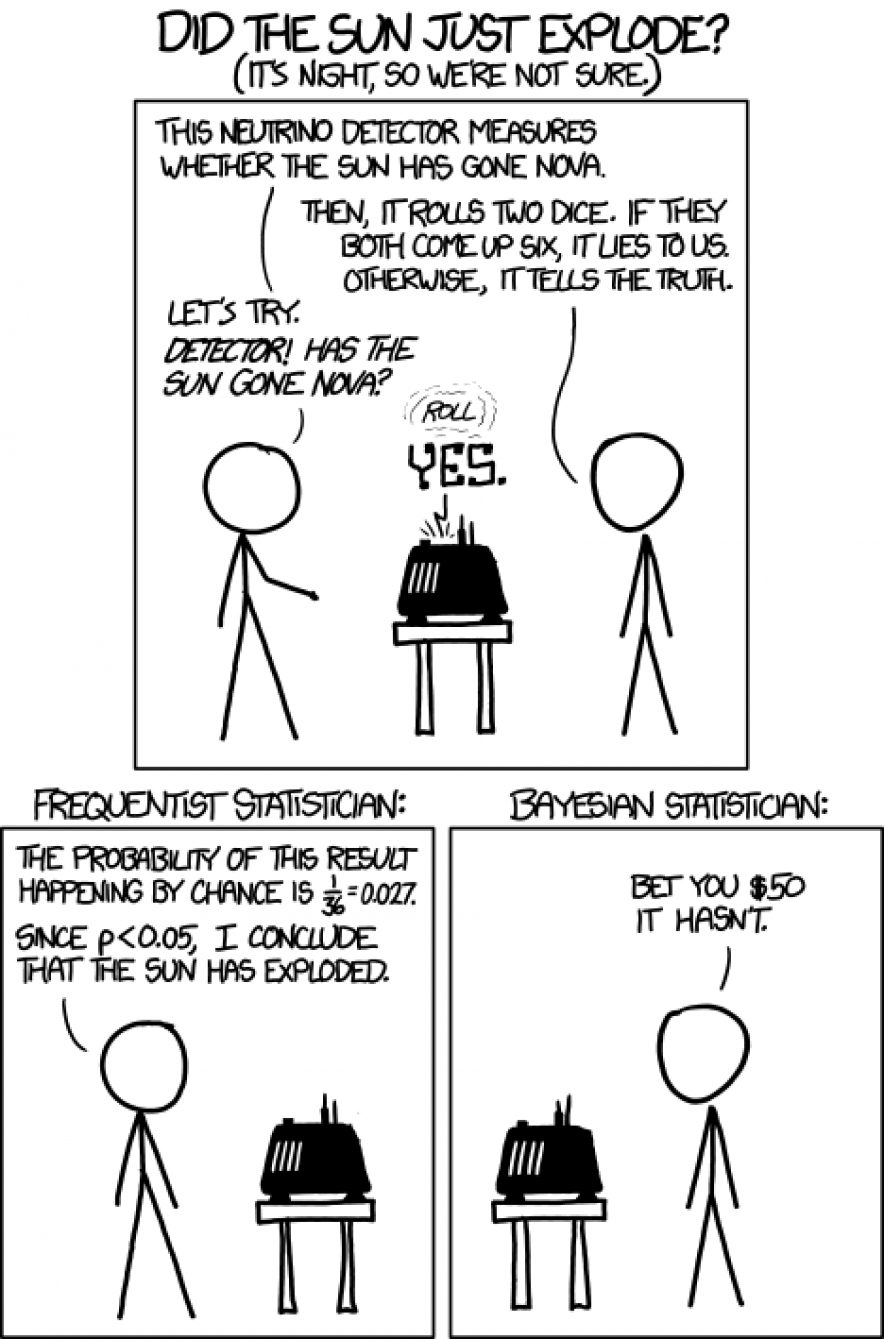

The line "Bet you $50 it hasn't" is a reference to Bayesian scholar Bruno de Finetti who extensively used bets in his thought experiments and examples. Source: xkcd.com

The Bayesian framework of inference is considered extremely useful (if sometimes puzzling, and occasionally regarded as a kind of mysterious voodoo magic) for solving complex problems with large inherent uncertainty that needs to be quantified. Bayesian methods are also thought to be the most information-efficient way to fit a statistical model, as well as among the most computationally intensive, a cost whose economics IOTA tackles at the level of hardware.

Stochastic Processes, Random Walkers, and Probability Trajectories

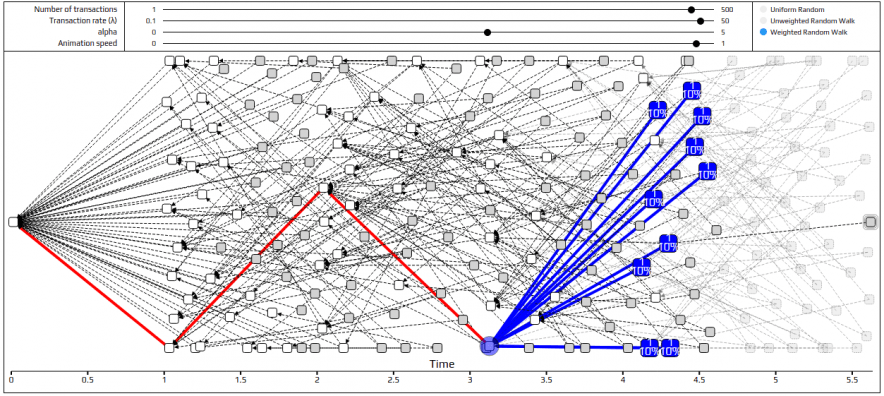

The tangle is a DAG-valued stochastic process ("stochastic" meaning random), designed to cope with real-world complexity: transactions on the tangle do not arrive evenly spread across time, and its structure accommodates different regimes and modes of activity. A stochastic process is essentially a generator of random samples, and random walks are mathematical objects that describe sequences of random steps. IOTA's tip selection algorithms are biased (weighted) random walks across the tangle, which follow a path of approximate certainty.
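
As a rough illustration of why transactions are not evenly spread in time, the sketch below generates arrival times from a Poisson process, a common simplified model for the flow of incoming transactions in analyses of the tangle. The rate, the time horizon and the helper names are arbitrary assumptions.

```python
import random

def poisson_arrivals(rate, horizon, seed=0):
    """Arrival times in [0, horizon) for a Poisson process with the given rate.

    Gaps between arrivals are independent exponential random variables,
    so arrivals bunch up and thin out even though the average rate is fixed.
    """
    rng = random.Random(seed)
    t, times = 0.0, []
    while True:
        t += rng.expovariate(rate)
        if t >= horizon:
            return times
        times.append(t)

def counts_per_unit(times, horizon):
    """Number of arrivals falling in each unit-length time window."""
    counts = [0] * horizon
    for t in times:
        counts[int(t)] += 1
    return counts

arrivals = poisson_arrivals(rate=5.0, horizon=20)
print(counts_per_unit(arrivals, 20))
```

Even with a constant average of five arrivals per unit of time, the per-window counts fluctuate noticeably, which is the kind of irregular load a tip selection strategy has to tolerate.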

A simulation of the tangle, given parameters of the number of transactions, the rate at which they arrive, the degree of randomness, and the type of random walk used for connecting incoming transactions from genesis to tip.

The structure of the tangle lends itself to a wide range of out-of-the-box algorithms that can optimize for many different strategies and circumstances of application.

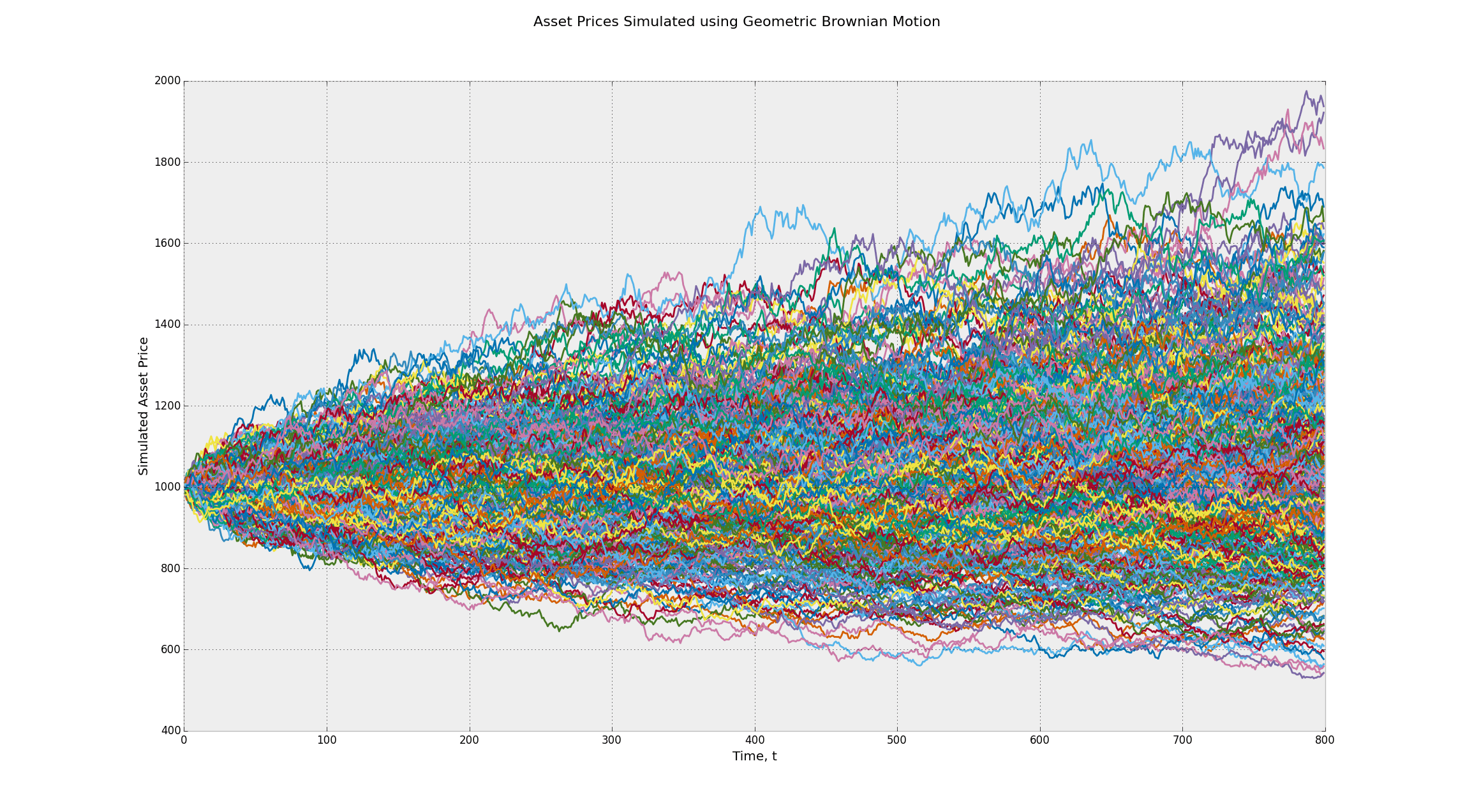

A stochastic process.

Markov Chains

"When the Facts Change, I Change My Mind. What Do You Do, Sir?" -- Winston Churchill

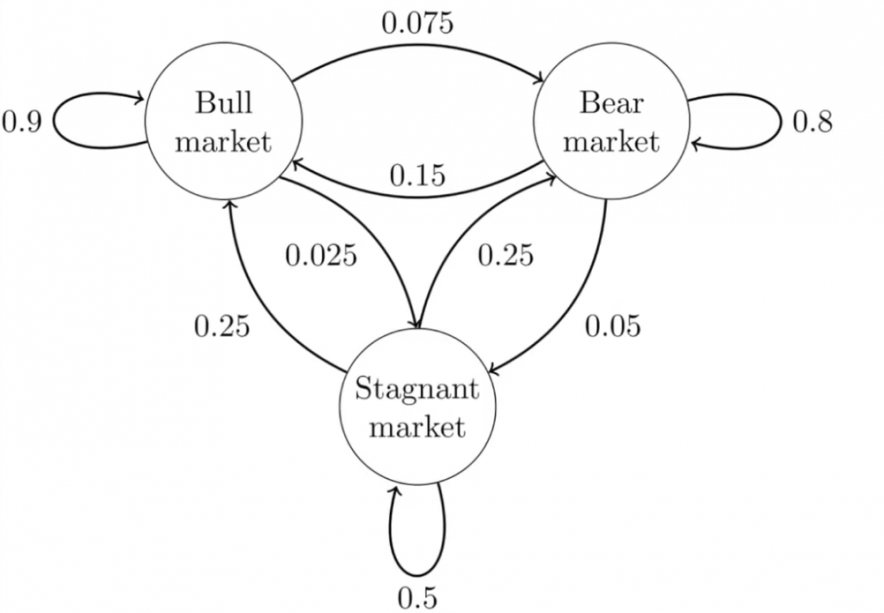

Markov chains are stochastic processes in which the next state depends only on the current one. Used for inference, they map out trajectories of probability step by step, integrating new information at every step and accounting for how the system might behave next before proceeding further. Essentially, they can be pictured as random walks on a graph of states; on the tangle, that graph is the DAG itself, and the walk's transitions follow specified probabilistic rules.

Markov chains are usually represented as state transition diagrams with attached probabilities.

Markov chains are defined by states and the transition probabilities between them. The intuition is that if a well-behaved chain is observed long enough, then as the number of trials increases, the fraction of time it spends in each state converges toward an equilibrium (stationary) distribution, revealing the long-run ratios that its transition probabilities favor.
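
A small sketch of that convergence, using a hypothetical three-state chain whose transition probabilities are made up for illustration. It compares the long-run frequencies from a simulated walk with the stationary distribution obtained by power iteration (repeatedly applying π ← πP).

```python
import random

# Hypothetical 3-state chain: row i lists P(next state = j | current state = i).
P = [
    [0.5, 0.4, 0.1],
    [0.2, 0.5, 0.3],
    [0.1, 0.3, 0.6],
]

def simulate(P, steps, start=0, seed=1):
    """Run the chain and return the fraction of steps spent in each state."""
    rng = random.Random(seed)
    states = range(len(P))
    counts = [0] * len(P)
    state = start
    for _ in range(steps):
        state = rng.choices(states, weights=P[state])[0]
        counts[state] += 1
    return [c / steps for c in counts]

def stationary(P, iters=500):
    """Power iteration: apply pi <- pi P until the distribution settles."""
    n = len(P)
    pi = [1.0 / n] * n
    for _ in range(iters):
        pi = [sum(pi[i] * P[i][j] for i in range(n)) for j in range(n)]
    return pi

empirical = simulate(P, steps=200_000)
exact = stationary(P)
print([round(x, 3) for x in empirical])
print([round(x, 3) for x in exact])
```

For this matrix the stationary distribution works out to (11/47, 19/47, 17/47), roughly (0.234, 0.404, 0.362), and the simulated frequencies land close to it regardless of the starting state.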

Monte Carlo Methods

An important contribution of IOTA to the field of distributed ledger technology (DLT) is the family of Markov Chain Monte Carlo (MCMC) algorithms it proposes within the framework of a distributed ledger. Under mild conditions, MCMC methods are guaranteed to produce samples that converge to the target distribution (given sufficient resources and enough time), and they are generally used to sample from, and compute expectations with respect to, complicated, multivariate and high-dimensional probability distributions where other methods are impractical or ill-suited.
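
The core MCMC idea can be seen in the classic Metropolis algorithm, sketched below for a one-dimensional target known only up to a constant. This is a generic textbook sampler, not IOTA's tip selection code; the target (a standard normal), the step size, the sample count and the burn-in length are arbitrary choices.

```python
import math
import random

def metropolis(log_target, n_samples, step=1.0, x0=0.0, seed=0):
    """Random-walk Metropolis sampler for an unnormalized log-density."""
    rng = random.Random(seed)
    x, samples = x0, []
    for _ in range(n_samples):
        proposal = x + rng.gauss(0.0, step)          # symmetric proposal
        log_ratio = log_target(proposal) - log_target(x)
        # Accept with probability min(1, target(proposal) / target(x)).
        if log_ratio >= 0 or rng.random() < math.exp(log_ratio):
            x = proposal
        samples.append(x)                            # on rejection, x repeats
    return samples

# Unnormalized standard normal: the normalizing constant is never needed.
samples = metropolis(lambda x: -0.5 * x * x, 50_000)
kept = samples[5_000:]                               # discard burn-in
mean = sum(kept) / len(kept)
var = sum((s - mean) ** 2 for s in kept) / len(kept)
print(round(mean, 2), round(var, 2))
```

Only ratios of the target density are ever evaluated, which is what makes the approach practical for distributions whose normalizing constant is intractable.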

The purpose of MCMC in the IOTA protocol is to choose the tips that a new transaction attaches to, and to follow a transaction's approvals through the correlated chains of the graph history until it is buried deep enough for an acceptable degree of certainty. For example, a seller receiving a payment can decide how much risk he is willing to accept in a given situation before treating the payment as confirmed. This provides a robust mechanism for liability management and risk estimation in any circumstance involving statistical estimates, and it can be extended to all kinds of scenarios depending on the kind of structured data in question.
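
A minimal sketch of a weighted random walk for tip selection, under stated assumptions: a small hypothetical tangle, every transaction with own weight 1, walks starting at genesis, and a transition rule in the spirit of the IOTA white paper, where a walker at x moves to an approver y with probability proportional to exp(-α(H_x - H_y)), H being cumulative weight. The `confirmation_confidence` helper is hypothetical; it mirrors the seller's risk threshold by running many walks and counting how often the selected tip approves a given transaction.

```python
import math
import random

# Hypothetical tangle: each transaction maps to the transactions that directly approve it.
APPROVERS = {
    "G": ["A", "B"],   # genesis
    "A": ["C", "D"],
    "B": ["D"],
    "C": ["E"],
    "D": ["E", "F"],
    "E": [],           # tips: nothing approves them yet
    "F": [],
}

def future_set(tx, approvers):
    """All transactions that approve tx directly or indirectly."""
    seen, stack = set(), list(approvers[tx])
    while stack:
        y = stack.pop()
        if y not in seen:
            seen.add(y)
            stack.extend(approvers[y])
    return seen

def cumulative_weights(approvers):
    # Assumption: every transaction has own weight 1.
    return {tx: 1 + len(future_set(tx, approvers)) for tx in approvers}

def random_walk(approvers, weights, alpha, rng, start="G"):
    """Walk toward the tips, favoring approvers with higher cumulative weight."""
    x = start
    while approvers[x]:
        ys = approvers[x]
        # These need not sum to 1; random.choices normalizes the weights.
        probs = [math.exp(-alpha * (weights[x] - weights[y])) for y in ys]
        x = rng.choices(ys, weights=probs)[0]
    return x

def confirmation_confidence(tx, approvers, alpha=0.5, walks=1000, seed=0):
    """Fraction of walks whose selected tip approves tx (directly or indirectly)."""
    rng = random.Random(seed)
    weights = cumulative_weights(approvers)
    approving = future_set(tx, approvers)
    hits = sum(random_walk(approvers, weights, alpha, rng) in approving
               for _ in range(walks))
    return hits / walks

print(confirmation_confidence("D", APPROVERS))
print(confirmation_confidence("C", APPROVERS))
```

Every tip in this toy tangle approves D, so its confidence is 1.0; C is approved only by tip E, so its confidence falls between 0 and 1 (roughly 0.6 with α = 0.5). A larger α makes walkers follow heavy branches more strictly, while α = 0 reduces the walk to an unweighted one.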

Weight Matrices: Own Weight, Cumulative Weight, and Minimum Weight Magnitude

As already mentioned, weights are a key metric in how associative connections form in neural networks, and on the tangle they are what the random walks are biased toward. Weights steer which transactions nodes validate, and the Coordinator currently in place serves as training wheels until the network can deal with malicious double-spending attempts on its own.

There are three kinds of weight in IOTA: own weight, cumulative weight and minimum weight magnitude (MWM). Every transaction has an own weight, which can take the values 1, 3, 9 and so on, that is, 3 to the power of n (as befits the balanced ternary nomenclature). Transactions issued by nodes constitute the site set of the tangle graph that forms the ledger (a wallet being simply a node's interface to the tangle), and a transaction's own weight reflects the work invested by the node that issued it.

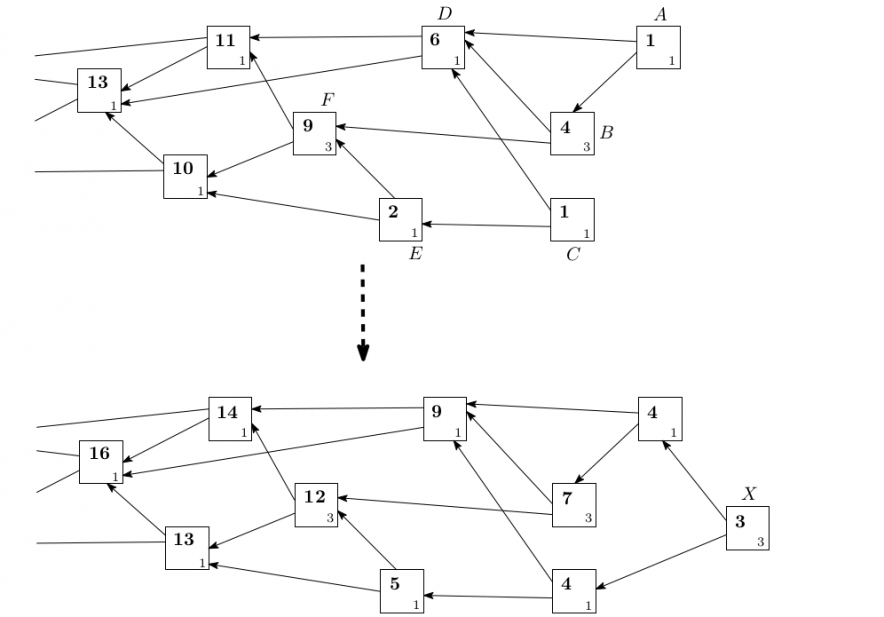

A DAG with weight assignments before and after a newly issued transaction, X. The boxes represent transactions, the small number in the corners of each box denotes own weight, and the bold number on top denotes the cumulative weight. Source: IOTA white paper.

As a transaction propagates through IOTA’s network, its cumulative weight grows with the number of approvals. Each arriving transaction must perform Proof-of-Work and validate two previous transactions, referred to as its trunk and branch (the new transaction approves them, and they are approved by it). Because tips are chosen with the weighted random walk, when branches compete, one of them eventually grows larger and forms a stronger consensus around itself.
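
The "one branch eventually wins" effect can be illustrated with a deliberately simplified toy model, not IOTA's actual algorithm: two conflicting branches compete for new arrivals, and each arrival joins a branch with probability proportional to exp(α × branch weight), which is the same relative preference the exp(-α(H_x - H_y)) rule gives when choosing between two approvers. The function name, α values and arrival count are assumptions for illustration.

```python
import math
import random

def resolve_conflict(alpha, arrivals, seed=0):
    """Toy model of two conflicting branches competing for new approvals.

    Each arrival adds weight 1 to branch 0 or branch 1, picking a branch with
    probability proportional to exp(alpha * branch_weight). alpha = 0 is an
    unbiased coin flip.
    """
    rng = random.Random(seed)
    weights = [1, 1]
    for _ in range(arrivals):
        top = max(weights)  # shift exponents to avoid overflow
        probs = [math.exp(alpha * (w - top)) for w in weights]
        branch = rng.choices([0, 1], weights=probs)[0]
        weights[branch] += 1
    return weights

for alpha in (0.0, 1.0):
    w = resolve_conflict(alpha, arrivals=200)
    print(alpha, w, round(max(w) / sum(w), 2))
```

With α = 0 the two branches stay roughly balanced, while with α = 1 an early lead is quickly amplified and nearly all new weight piles onto one branch.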

Cumulative weight, on the other hand, is a transaction's own weight plus the sum of the own weights of all transactions that directly or indirectly approve it. It is an important metric on a transaction's way to network approval, and the strategy for choosing which tips to approve is of key significance: the bias has to be calibrated so that it discourages lazy behavior while, at the same time, not leaving tips behind unapproved.
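
The definition of cumulative weight translates directly into code. The sketch below uses a hypothetical five-transaction tangle with own weights chosen as powers of 3, then attaches a new transaction X (own weight 1) approving C and D, mirroring the before-and-after idea of the white-paper figure above. All names and weights are illustrative.

```python
# Hypothetical tangle: tx -> (own weight, transactions it directly approves)
TANGLE = {
    "G": (1, []),          # genesis
    "A": (3, ["G"]),
    "B": (1, ["G"]),
    "C": (1, ["A", "B"]),
    "D": (9, ["A", "C"]),
}

def past_set(tx, tangle):
    """Transactions that tx approves directly or indirectly."""
    seen, stack = set(), list(tangle[tx][1])
    while stack:
        y = stack.pop()
        if y not in seen:
            seen.add(y)
            stack.extend(tangle[y][1])
    return seen

def cumulative_weights(tangle):
    """Own weight plus the own weights of every direct or indirect approver."""
    cw = {tx: own for tx, (own, _) in tangle.items()}
    for tx, (own, _) in tangle.items():
        for y in past_set(tx, tangle):
            cw[y] += own  # tx approves y, so y gains tx's own weight once
    return cw

before = cumulative_weights(TANGLE)
after = cumulative_weights({**TANGLE, "X": (1, ["C", "D"])})
print(before)
print(after)
```

Because X approves C and D, and through them A, B and G, every one of those transactions gains exactly X's own weight, while the new transaction itself starts with a cumulative weight equal to its own weight.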

This, again, resembles the way neurons form connections and transmit signals: a neuron's activation depends on the sum of the activations of the neurons feeding into it, each multiplied by the weight of its connection, and when that sum reaches a threshold, the neuron fires an action potential that propagates the signal further.
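
The neuron analogy corresponds to the simplest artificial neuron, a threshold unit in the perceptron style, sketched below. It is a simplified model, not a description of biological neurons or of the tangle itself; the weights and threshold are arbitrary.

```python
def fires(inputs, weights, threshold):
    """Threshold unit: fires when the weighted sum of its inputs reaches the threshold."""
    total = sum(x * w for x, w in zip(inputs, weights))
    return total >= threshold

weights = [0.5, 0.4, 0.9]
print(fires([1, 1, 0], weights, threshold=0.8))  # 0.5 + 0.4 = 0.9 >= 0.8 -> True
print(fires([1, 0, 0], weights, threshold=0.8))  # 0.5 < 0.8 -> False
```

No single weak input is enough on its own; the unit fires only when enough weighted activity accumulates, much as a transaction becomes trusted only once enough weight has accumulated behind it.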

Nash Equilibrium: Rationality Without Enforcement

Decision-making is implicit in the functioning of every dynamic system of interactive relations, and a Nash equilibrium is a game-theoretic concept describing a state in which no actor can improve his outcome by changing strategy on his own, given the choices of all the other participants. Essentially, a Nash equilibrium is self-enforcing and grounded in pragmatism: it needs no policing, even in the absence of enforcement mechanisms. (For example, it makes sense for everybody to follow traffic light signals.)
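
The traffic-light example can be made concrete by checking every strategy profile of a small two-player game for profitable unilateral deviations, which is exactly the Nash condition. The payoff numbers below are hypothetical, chosen so that ignoring the signal pays off only when the other driver obeys and is disastrous when both ignore it.

```python
from itertools import product

STRATEGIES = ["obey", "ignore"]
# Hypothetical payoffs: (driver 1, driver 2) for each pair of choices.
PAYOFF = {
    ("obey", "obey"): (2, 2),
    ("obey", "ignore"): (0, 1),
    ("ignore", "obey"): (1, 0),
    ("ignore", "ignore"): (-5, -5),
}

def is_nash(profile):
    """True if no player gains by switching strategy while the other stays put."""
    for player in (0, 1):
        current = PAYOFF[profile][player]
        for alt in STRATEGIES:
            deviation = list(profile)
            deviation[player] = alt
            if PAYOFF[tuple(deviation)][player] > current:
                return False
    return True

equilibria = [p for p in product(STRATEGIES, repeat=2) if is_nash(p)]
print(equilibria)  # [('obey', 'obey')]
```

Nothing in the code enforces obedience; mutual obedience survives simply because each driver does worse by deviating alone.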

A paper entitled "Equilibria in the Tangle," from March 8th, 2018, proves the existence of Nash equilibria in the tangle and uses simulations to show that, even though there are no strict (only approximate) rules for choosing transactions, when a large number of nodes follow some reference rule, it is in the interest of any such node to stick to a rule of the same kind ("selfish" participants will cooperate with the tangle, as they have no incentive to deviate).

This makes sense within the overall logic of IOTA, in which users are identified with the devices they interface through and are, as such, a byproduct of the network itself. These premises create an environment that is collaborative by nature, as users contribute to the stability and security of the network simply by using it.

Conclusion

Unlike other distributed ledgers, IOTA's tangle deals in probabilities, and it avoids the pitfalls of capture and centralization by empowering the network itself, in its utility role of optimizing weighted solutions, rather than any privileged party, global solution or ideological inclination.

Disclaimer: information contained herein is provided without considering your personal circumstances, therefore should not be construed as financial advice, investment recommendation or an offer of, or solicitation for, any transactions in cryptocurrencies.